Want to share your screen? See the person you're talking to? Contact us via the digital library desk! We will be with you shortly.

Monday-Friday

Good research data management is key to making research reproducible and accessible to others. But where do you start? This page gives an overview of the tasks typically involved in research data management and points to resources where you can get further help (Research data management resources) and training (Research data management training).

Icons from Streamlinehq, CC BY 4.0 Licence.

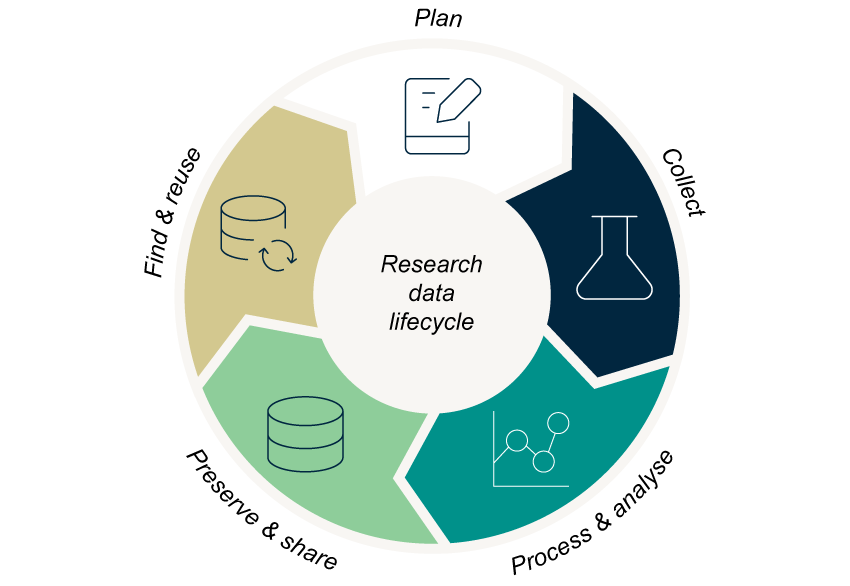

The research data lifecycle gives an overview of the phases your data goes through during a research project: plan, collect, process & analyse, preserve & share, and find & reuse. Each phase is associated with different data management tasks. Below we describe some typical data management tasks for each phase:

Phases of the research data lifecycle